All the latest news and updates from San Jose


These are some of the Instinct MI350 Series partners, which will have products coming to market with the chips in Q3 2025, including Oracle, Dell Technologies, HPE, Cisco, and Asus.

(Image credit: Jane McCallion/Future)

Yu Jin Song explains that MI350 will become a key part of Meta’s AI infrastructure (the company is already using MI300X accelerators).

“We’re also quite excited about the capabilities of MI350X,” he says. “It brings significantly more compute power and acceleration memory and support for FP4 and FP6, all while maintaining the same form factor as MI300 so we can deploy quickly.”

“We’re seeing critical advancements that started with [inferencing] and now extending to all of your AI offering,” says Yu Jin Song. “I think AMD and Meta have always been strongly aligned on … neural net solutions.”

“We troubleshoot problems together, and we’re able to deploy optimized systems at scale. So our team really see AMD as a strategic and responsive partner and someone that we really like. Well, we love working with your engineering team. We know that we love the feedback, and you also hold the high standard. You are one of our earliest partners in AI,” he adds.

And now another guest: Yu Jin Song, Meta VP of engineering – Meta, of course, being the force behind Llama LLM.

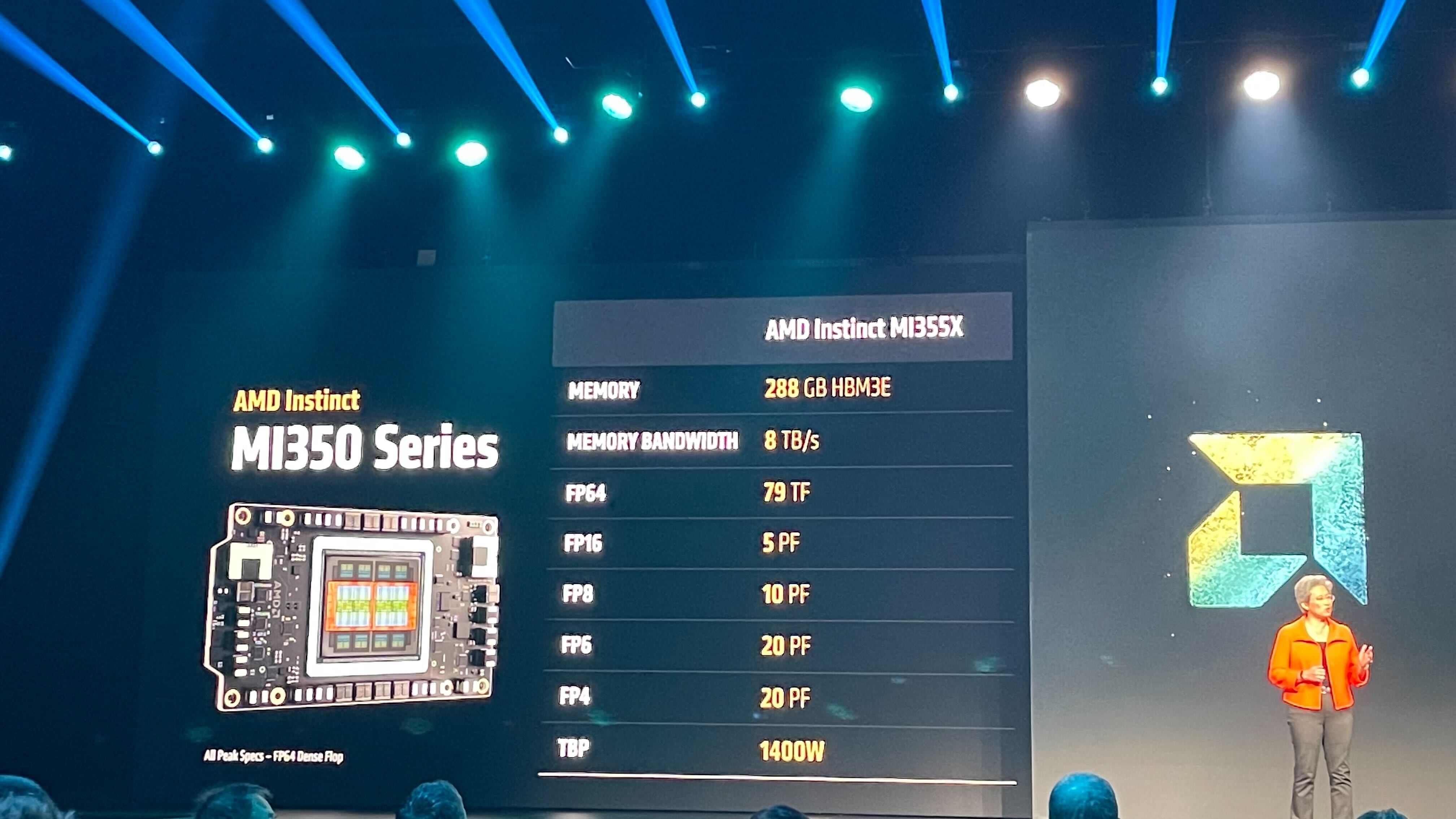

Su claims that the MI350 Series “delivers a massive 4x generation leap in AI compute to accelerate both training and inference”. Some of the key claimed specs are:

- 288GB memory

- Running up to 20 billion parameters on a single GPU

- Double the FP throughput compared to competitors

- 1.6x more memory than competitors

(These are AMD claims that haven’t been independently verified by ITPro)
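For a rough sense of how the memory figure relates to model size, here is a back-of-the-envelope sketch. It is illustrative arithmetic only, not an AMD calculation: it counts model weights alone (no KV cache, activations, or runtime overhead) and assumes 1GB means 10^9 bytes. The function names are made up for this example.

```python
# Weights-only estimate. Assumptions: 1 GB = 10**9 bytes, and no allowance
# for KV cache, activations, or framework overhead.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}
CLAIMED_MEMORY_GB = 288  # capacity figure from AMD's claimed specs


def weights_gb(params_billion: float, bits: int) -> float:
    """Approximate size in GB of the weights for a model of the given size."""
    return params_billion * bits / 8


def max_params_billion(memory_gb: float, bits: int) -> float:
    """Largest weights-only model (in billions of parameters) that fits."""
    return memory_gb * 8 / bits


if __name__ == "__main__":
    for name, bits in BITS_PER_PARAM.items():
        print(
            f"{name}: a 20B-parameter model needs about {weights_gb(20, bits):.0f} GB; "
            f"{CLAIMED_MEMORY_GB} GB holds up to about "
            f"{max_params_billion(CLAIMED_MEMORY_GB, bits):.0f}B parameters"
        )
```

Real deployments need headroom beyond the weights, so practical limits will sit below these ceilings.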

(Image credit: Jane McCallion/Future)

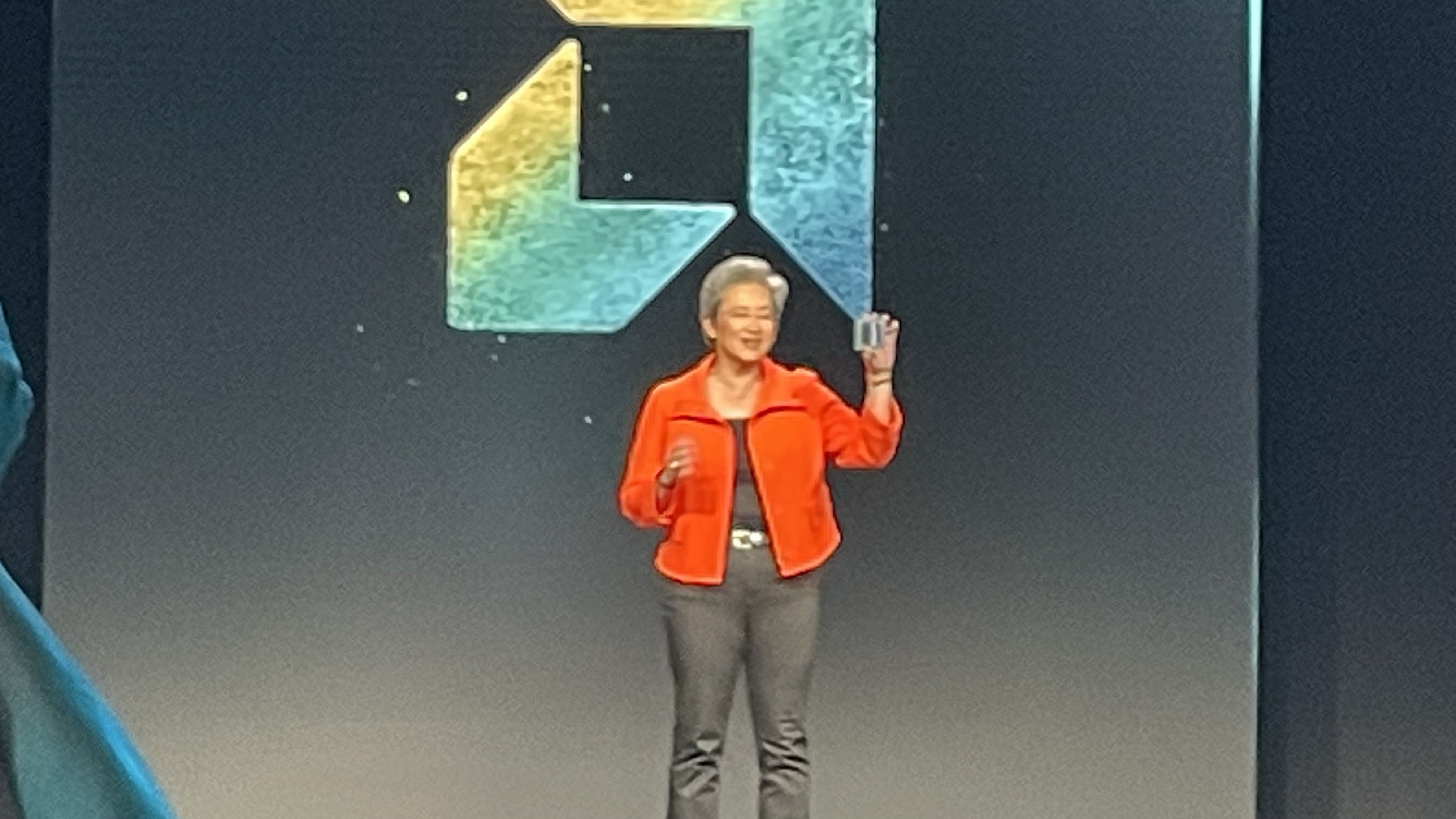

Here’s Su showing off the MI350 series on stage

(Image credit: Jane McCallion/Future)

“With the MI350 Series, we’re delivering the largest generational performance leap in the history of Instinct, and we’re already deep in development of MI400 for 2026,” says Su.

“Today, I’m super excited to launch the MI350 Series, our most advanced AI platform ever that delivers leadership performance across the most demanding models,” she says, adding that while AMD will talk about the MI355 and the MI350, “they’re actually the same silicon, but MI355 supports higher thermals and power envelopes so that we can even deliver more real world performance.”

Sun leaves the stage and now we have our first product announcement, the AMD Instinct MI350 Series.

Sun focuses on how his small team is being bolstered by AMD hardware like MI300X and – of course – openness.

Su is now joined on stage by her first guest, Xia Sun of xAI, to talk about how the company behind Grok is working with AMD.

“Linux surpassed Unix as a data center operating system of choice when global collaboration was unlocked,” she continues. “Android’s open platform helped scale mobile computing to billions of users, and in each case, openness delivered more competition, faster innovation and eventually a better outcome for users. And that’s why for us at AMD, and frankly, for us as an industry, openness shouldn’t be just a buzzword. It’s actually critical to how we accelerate the scale, adoption and impact of AI over the coming years.”

Su hits on another theme that’s becoming popular among many enterprise hardware providers: open source and openness.

“AMD is the only company committed to openness across hardware, software and solutions,” Su claims. “The history of our industry shows us that time and time again, innovation truly takes off when things are open.”

AI isn’t just a cloud or data center question now, it’s also an endpoint question, and particularly the PC. “We expect to see AI deployed in every single device,” she says.

As with many companies now – including partner Dell and rival Nvidia – AMD is talking up AI agents and their (positive) impact on hardware.

Su says that agentic AI represents “a new class of user”.

“What we’re actually seeing is we’re adding the equivalent of billions of new virtual users to the global compute infrastructure. All of these agents are here to help us, and that requires lots of GPUs and lots of CPUs working together in an open ecosystem,” she says.

And here is the woman of the hour, Dr Lisa Su, CEO of AMD.

“I’m always incredibly proud to say that billions of people use AMD technology every day. Whether you’re talking about services like Microsoft Office 365 or Facebook or Zoom or Netflix or Uber or Salesforce or SAP and many more, you’re running on AMD infrastructure,” says Su. “In AI, the biggest cloud and AI companies are using Instinct to power their latest models and new production workloads. And there’s a ton of new innovation that’s going on with the new AI startups.”

(Image credit: Jane McCallion/Future)

And we are underway with an opening video – apparently the key for the AI future is trust.

The auditorium is starting to fill up at the San Jose McEnery Convention Center as we wait for Lisa Su to take to the stage for her keynote address this morning.

(Image credit: Jane McCallion/Future)


With a little under two hours to go before Lisa Su’s keynote starts, it’s worth reflecting on what happened at the company’s last Advancing AI conference, which only took place eight months ago in October 2024.

At that event, Su launched the enterprise-focused Ryzen AI Pro 300 Series CPU range, revealed the Pensando Salina data processing unit, and announced the arrival of Instinct MI325X accelerators. It’s unlikely that the company has invited customers, partners, and press to hear updates on these products, but it could indicate the direction of travel.

At Computex in June 2024, Su announced the company was moving to an annual release cadence for its GPUs. With the Instinct MI325X accelerator series having launched at the last Advancing AI, we could see an update in this area.
