Apple Has Lost Its Way with Apple Intelligence

Apple has long been a company that embraces the latest trends with a slight pause, almost as if it's waiting for a technology to mature before incorporating it into its products. This has been the case with most advancements in smartphones. Dual cameras, OLED screens, high refresh rates, and almost everything else Apple hailed as innovation arrived in its products only after other brands had already implemented it.

Sure, this strategy makes sense: let the others do the market research, take the risks of bringing a nascent technology to consumers, and spend millions figuring it out. Then, once it's matured enough, add it to the next generation of iPhones and call it a day, albeit a massively successful one if you count by the dollars it rakes in.

The Race for AI in Smartphones

Of course, that meant Apple was never going to be the first to introduce full-fledged AI features into its smartphones or its operating systems. And it wasn't; not by a long shot.

Other smartphone makers kept incorporating more and more AI features into their smartphones over the years. We got Magic Eraser, which wasn't always perfect but was quite impressive nonetheless, and it only got better over time. We got the weird photo features on the Pixel that let us take group selfies without leaving anyone out of the frame. We got Best Take. Plenty of AI features made it into smartphones before Apple made its move.

All that, as I said, was expected. However, it also meant that I expected Apple's implementation of AI to be something that made me use it, or at least want to.

So far, it does neither.

Apple Intelligence: AI for the Rest of Us or AI for the Sake of It?

When WWDC 2024 arrived, I was excited by the prospect of new Apple software updates and features. This, in itself, is nothing new. I am perpetually caught in a cycle of telling myself I won't install the Developer Betas this year, then caving and installing them the moment they're released. It's a side effect of both my love for technology and the field I work in, and it's not a side effect I dislike even a little bit.

Adding to my interest was the anticipation of hearing about Apple Intelligence, Apple's much-awaited foray into AI. To be fair, the company did a stellar job presenting it. The presentation was well set up, and the examples shared by various Apple executives seemed interesting and impressive, if not entirely mind-blowing.

The examples were all about how Apple Intelligence knows your personal context and works accordingly, blending into the background and doing the work for you so you don't have to. It was AI the way I have always wanted it to be: invisible, potent, and available to handle mundane tasks and the things you would usually forget.

Apple Intelligence is ALMOST Here

Now, I know that Apple Intelligence isn’t completely here yet, and there are things that will be coming sometime in 2025. And that’s my first gripe with it — Apple Intelligence was clearly not ready for prime time when Apple announced it. Sure, they started rolling out some features in the betas, and by now we have a lot available in the stable build as well. However, it didn’t feel like an Apple rollout at all this time around.

Usually, new iPhones launch alongside a stable iOS update, and that update comes with all its features. Usually. This time around, iOS 18 came out with absolutely no AI features, which meant the iPhone 16 series launched as a brand new phone without its most talked-about feature. Sure, the Camera Button was a new thing as well, but that's a whole other rant for some other day.

The truth is, Apple Intelligence was the reason for most people to upgrade to the iPhone 16 series, and it was just. too. late.

It’s still late, by the way, and we don’t know exactly when it will be fully available. And what’s more, this is not even the worst of it.

So Far Apple Intelligence is Old Wine in a New Bottle

Okay, so that analogy might not work too well, since a lot of old wines would actually be amazing if I could get my hands on them. Still, the saying captures what Apple Intelligence feels like to me, at least as far as it has been made available to us.

First, there are the features. Broadly speaking, I would classify the AI features in iOS 18 into five categories:

- Things we’ve already seen, used, and stopped using

- Useful features that don’t work well

- Novelties

- Some actually good things

- “Coming Soon”

Let me explain.

The Already Seen, Used, and Forgotten

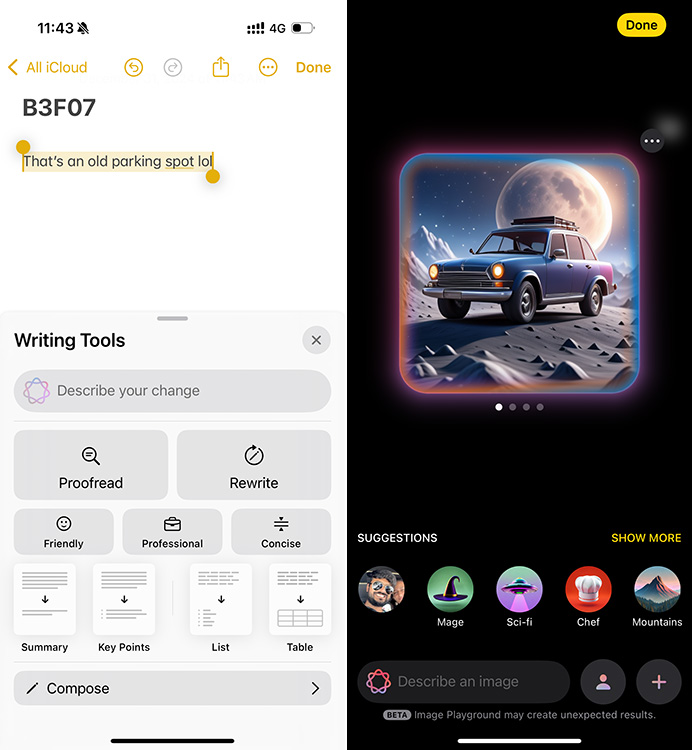

So far, two main features fall into this category: Writing Tools and Image Playground.

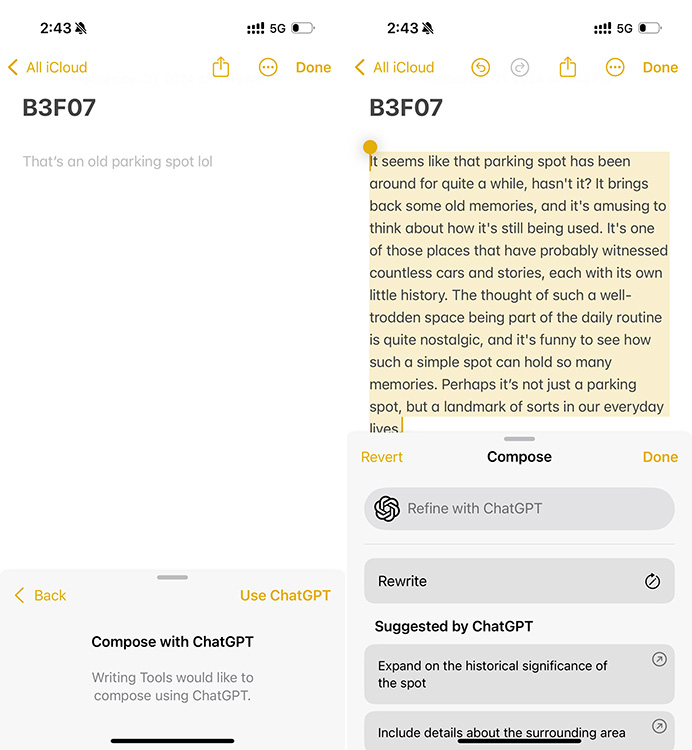

Writing Tools has the potential to be useful; I can see that every time I use it to rephrase something or pull the key points out of a block of text. However, it's nothing exceptional, and it's definitely nothing new. There are countless online and on-device AI tools that do exactly this. ChatGPT does it. In fact, if you want Writing Tools to do anything more than a very basic rephrasing of your words, Apple usually ends up asking your permission to send your text to ChatGPT to get the desired results.

Image Playground is basically DALL-E, or Stable Diffusion, or any of the other AI image generation apps and tools that we’ve seen over the last couple of years. If anything, Image Playground is more limited. You can’t create realistic images, for one thing. No matter how much you try, Apple Intelligence will simply not let you create realistic images.

One argument for this is that it prevents deepfakes, which I can understand, especially since I have previously written about how easy it is to generate realistic-looking AI images these days. However, Image Playground won't let you create a realistic-looking image of a car, a landscape, or even a tree. Nothing can be realistic. It's either cartoonish, a sketch, or something else entirely; anything but realistic.

Image Playground also powers the Image Wand feature on the iPad and iPhone, and while it works, it doesn't really work well. For one thing, anything you draw in the Notes app and then use Image Wand on will invariably come out looking like a sketch. Usually, it's at least a good-looking sketch, but it's always a sketch. Sure. Whatever.

However, Image Wand also screws up entirely at times. That's not something I expected from Apple Intelligence, because Apple, being Apple, is usually good at implementing features so that they just work. Simply, and often intuitively. Not Image Playground, though.

Useful Features that Don’t Work Well

Sure, Writing Tools and Image Playground were always going to be features you'd use once in a while when you really needed them, and otherwise not bother with. I get that. And iOS 18 actually does bring some really useful AI features. Or at least, features that had the potential to be really useful.

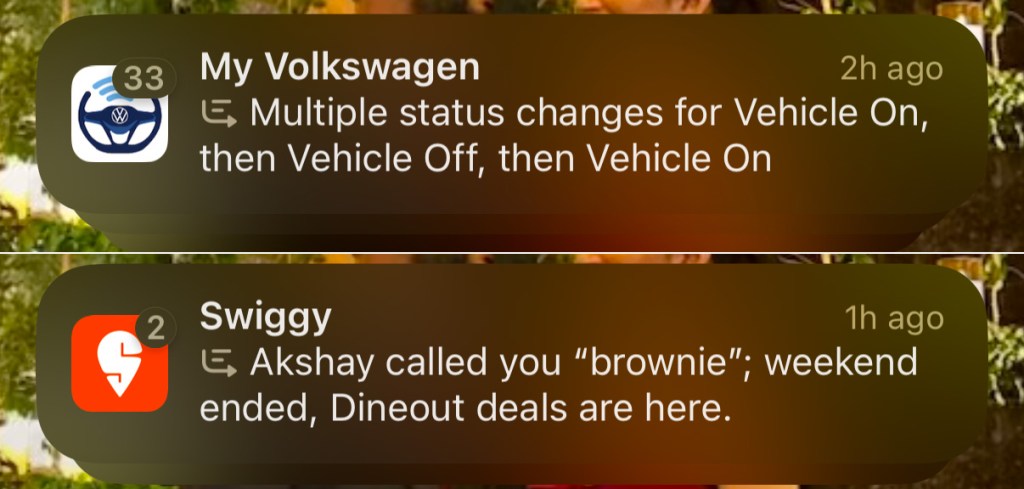

First up, Notification Summaries. Look, I get a lot of notifications throughout my day, and while a lot of them are potentially useless ramblings from certain friends and coworkers (looking at you, Sagnik and Upanishad), some messages, even from them, are actually useful. What's more, since most of these ramblings are on Slack, they can sometimes bury actually important messages from our editor (now you know why I sometimes miss your messages, Anmol: it's these two guys. Do with that information what you will).

Notification Summaries, therefore, felt like the savior I was looking for.

Unfortunately, Apple Intelligence is very stupid when it comes to summarising notifications. It sometimes produces hilarious results, and a lot of the time it gets the context completely wrong.

Then, there are the Smart Replies. These show up just above the keyboard based on your conversation, but they aren't very… intelligent. Okay, to be fair, the options it shows usually make sense, but they aren't the kinds of things you'd normally say. Even weirder, the Smart Replies feature works quite well in the Mail app, where it even asks follow-up questions to further fill out the response, but it doesn't do so in iMessage.

Third, and last, is the Clean Up tool. This is another new addition to the Photos app, and it's basically Magic Eraser gone wrong. It does work on the easy stuff, but it's nowhere near what you'd get with Google's Magic Eraser. That makes it a feature I wouldn't use very often, especially since Magic Eraser is available on the iPhone through the Google Photos app anyway.

Novelties

One thing I have noticed with smartphone brands bringing AI features to their devices is that they always include some AI features that look really cool and are fun to play around with, but aren't the kind of thing you would actually use. Novelties, in other words.

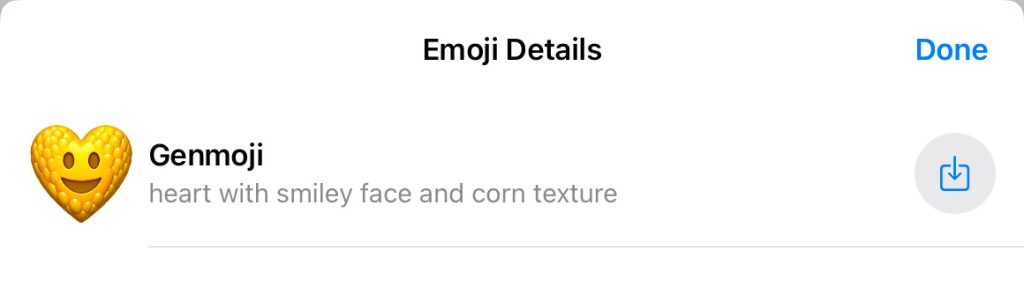

Apple Intelligence has these as well. Particularly, I’m talking about the Genmoji feature.

Credit where it’s due, Genmoji works really well. And usually, you can get the emoji you want to make by describing it in simple terms. That does mean you get the ability to send custom emojis to your friends, and since these are added as “stickers” in your keyboard, you can actually use them outside of iMessage too, including on WhatsApp.

That’s great. However, after the initial few days of trying out Genmoji and creating a few, I have neither created new ones, nor have I found myself reusing the ones I created.

Features like these are great for getting people talking about the cool new thing. Not everyone keeps up with every feature in a new software update, and having people say "did you see this cool new emoji I made with AI" is a clever way of ensuring more people find out about the feature and are pulled into wanting to buy a new iPhone that supports Apple Intelligence.

At the end of the day, however, whether you buy the iPhone 16 for Apple Intelligence or not, the truth is you won't really use Genmoji beyond a couple of days. But for Apple, Genmoji will have done its job: getting more people excited about Apple Intelligence, and making more people want AI on their iPhone.

Some Actually Good Things

Having Apple Intelligence on your iPhone isn't going to fundamentally change the way you use your device. That much is obvious, and I would actively advise people not to upgrade to the latest iPhone if they're doing it solely for the AI features. However, Apple Intelligence does have some good things as well.

The call recording feature is really good. It's something Android phones have offered for years, and something for which iPhone users have had to rely on third-party call recording apps.

Now, you can just tap the call recording icon on your call screen and start recording any call. That's not exactly "AI", and in fact, the feature is available on phones that don't support Apple Intelligence as well. What Apple Intelligence-compatible iPhones do bring to the table in relation to call recording is call summaries.

These show up in your Notes app, where you can quickly view a summary of the entire call. I have yet to find a situation where I wanted a summary of a recorded call, but that's just me. I'm sure loads of people regularly have conversations that they not only want to record, but also want summarised for a quick overview later.

Coming Soon

Speaking of "later", there are still more features that aren't even here yet. As I mentioned earlier, Apple Intelligence was clearly not ready for prime time when Apple announced it, and nothing makes this more evident than the fact that we're six months past the announcement of iOS 18, almost three months past its stable public release, and a lot of Apple Intelligence is still "coming soon".

Case in point, photo editing with Siri. With Apple Intelligence, you can, apparently, open up a photo, launch Siri and ask it to make edits, such as “make this photo pop!”. Right now, this doesn’t work. It simply shows a search result for an image editing app that has the word “pop” in it, or generates a reply from ChatGPT informing you how the photo can be made to pop.

There's a lot more that's still coming soon and, according to Apple, will roll out with software updates "in the coming months". So there's really no fixed timeline for it either.

Lest We Forget: ChatGPT

We also have ChatGPT integration, which is actually fairly useful in itself and can make Siri better at certain things than it would otherwise be. However, the number of free ChatGPT requests you can make is limited; beyond that, you'd have to subscribe to ChatGPT Plus for $20 every month.

Again, credit where it’s due, Siri does tell you when it wants to share information with ChatGPT to provide you with better answers, and you can choose to share or not share information at that point. That is at least better than simply not knowing when your data is being processed on-device, on Apple’s own cloud (with Private Cloud Compute), or on OpenAI’s servers.

So far, Siri will utilise ChatGPT’s prowess if you use the “Describe Changes” feature in Writing Tools, or if you ask Siri to describe a photo. This, by the way, still isn’t available in the stable public builds for iOS 18, but it should be making its way to users soon; it is, at least, available in the beta. And ChatGPT is actually good at doing most of these tasks.

That said, I'm not entirely sure I'm comfortable having my photos shared with ChatGPT just so they can be described, but I also don't know whether the A18 Pro's NPU is capable of doing that on-device; probably not.

Apple Intelligence Has the Potential to Be Great, but the Rollout Is Messy

Look, I don’t hate Apple Intelligence. If anything, I’m still cautiously optimistic about the thing. I am just surprised by the rather messy rollout that it has had so far. Some features are here in Developer and Public betas, some features are here in the stable iOS 18 builds, and some features are simply “coming soon.”

I expected Apple Intelligence to arrive sooner, if not exactly in time for the first public release of iOS 18. I expected Apple's implementation of AI to work better than what I have already seen from other smartphone makers. And I definitely expected the features that are here to work better than they do. Especially Notification Summaries… god, I had such high hopes for Notification Summaries.
