I put Grok vs MetaAI in a 7-round face-off — here’s the winner

Grok and MetaAI both started out as chat interfaces for social media platforms but are gradually evolving into standalone tools rivaling the capabilities of ChatGPT and Gemini.

Both bots can generate images, write code and create compelling stories. They also both “feel” different to engage with compared to the major players like ChatGPT, Gemini and Claude, offering a more natural tone of voice and response. However, that is purely anecdotal and based on my own experience, with no evaluations to back it up.

I’ve decided to put them to the test with a series of 7 prompts. This follows the same format I used in similar tests between ChatGPT and Gemini, ChatGPT and Claude, Claude and Gemini, and ChatGPT and Grok. As an aside, Grok is the only one that beat ChatGPT.

Creating the prompts

This test is not exhaustive. I don’t look at specifics within the image generation process; rather, I create a single image from each using the same prompt. Decisions are largely subjective and based on my own taste, albeit measured against a pre-determined set of criteria.

Apart from image generation, no special features of the chatbots are used. Both have live data access, though, so I will be looking to see how well they handle the planning request and whether they incorporate live data in the response.

1. Image Generation

(Image: © Meta vs Grok)

First up we’re going to run the AI image generation test. Grok uses its own built-in model previously known as Aurora. MetaAI uses Meta’s own in-house image model. Neither is using native image generation, but then no public model uses that technique yet.

Here I’m asking the AI model to generate an image of a man with glasses and a beard at a messy desk. This is not autobiographical, honestly.

The prompt: “A man in his early 40s with a beard and glasses is sitting at a messy desk in a home office, looking intently at a laptop screen with a focused expression. He’s wearing a comfortable sweater and jeans. One hand is on the mouse, the other is holding a pen that he’s tapping against his chin. There are stacks of books and papers surrounding him, and a half-eaten bowl of ramen sits on a coaster beside the keyboard. Light from the setting sun streams through the window behind him, casting long shadows across the room.”

While MetaAI’s image is more engaging, it loses on realism compared to the Grok image. Grok’s image excels in lighting, atmosphere, and mood, aligning more closely with the artistic intent of the prompt.

- Winner: Grok for a more realistic image

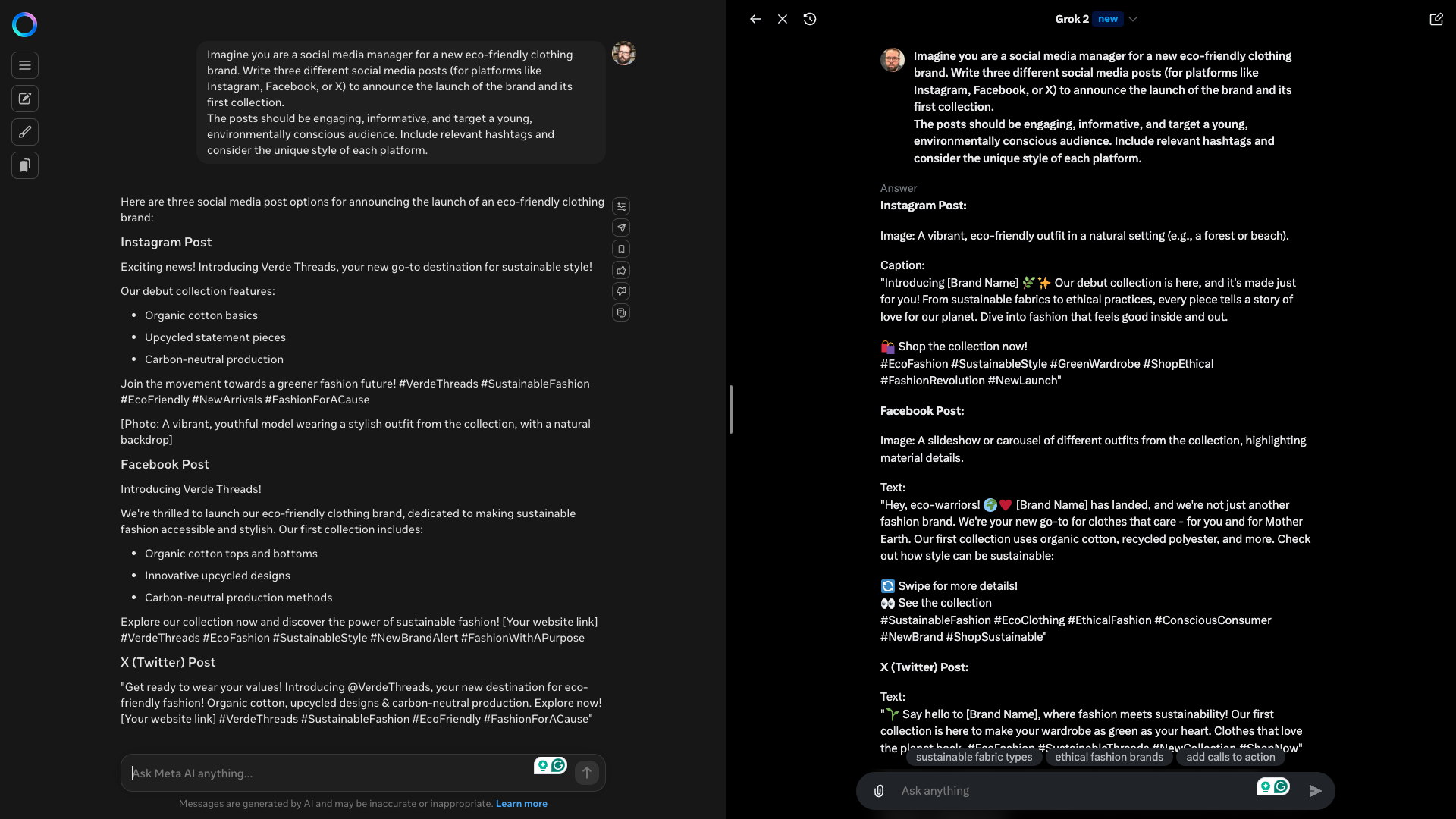

2. Social Media

(Image: © MetaAI vs Grok)

In all of my previous tests I’ve made prompt 2 about image analysis, but I’m in the UK, where MetaAI doesn’t have image analysis capabilities. I can’t give it a picture, so instead I’ve created a prompt playing on the social media connection.

Prompt: “Imagine you are a social media manager for a new eco-friendly clothing brand. Write three different social media posts (for platforms like Instagram, Facebook, or X) to announce the launch of the brand and its first collection.

The posts should be engaging, informative, and target a young, environmentally conscious audience. Include relevant hashtags and consider the unique style of each platform.”

Full response in a Google Doc. Grok wins this for multiple reasons, not least because it didn’t just make up a brand. It created a placeholder campaign that the user can then insert their own brand into. It also offered guidance.

- Winner: Grok for a more emotionally resonant and creative series of posts

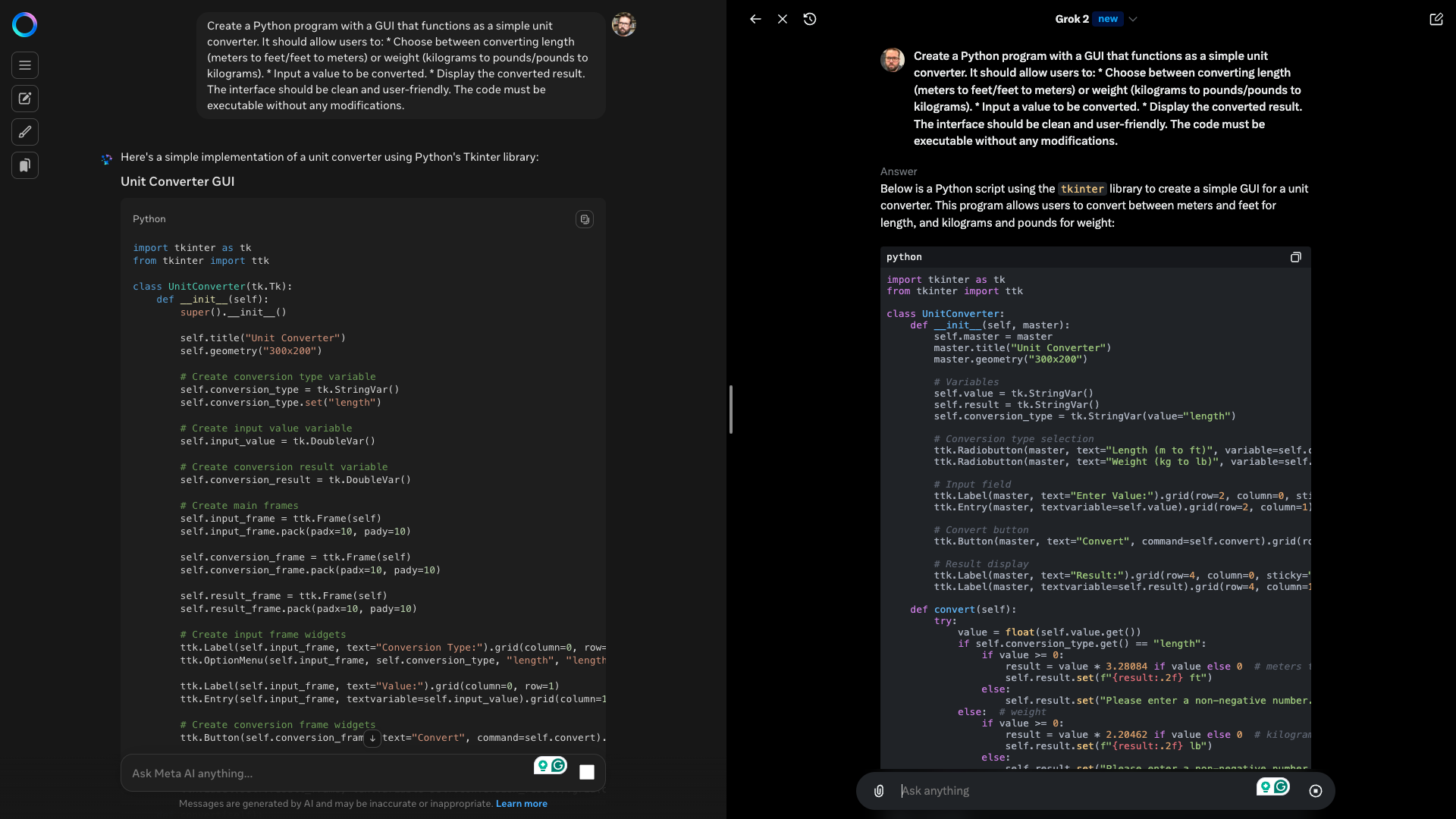

3. Coding Challenge

(Image: © MetaAI vs Grok)

In previous tests I’ve had the models create games, a to-do list app and a Pomodoro timer. Here I’m getting them to create a simple unit converter that handles length and weight.

Prompt: “Create a Python program with a GUI that functions as a simple unit converter. It should allow users to:

Choose between converting length (meters to feet/feet to meters) or weight (kilograms to pounds/pounds to kilograms).

Input a value to be converted.

Display the converted result.

The interface should be clean and user-friendly. The code must be executable without any modifications.”

Both apps were surprisingly similar and worked straight out of the box. I gave it to Grok because it actually included better labeling on the length and weight selector, although I preferred the dropdown from MetaAI.

- Winner: Grok for better display of units
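
For context, here is a minimal sketch of the kind of program the prompt asks for. It is not the code either chatbot produced. The names `FACTORS`, `convert` and `main` are my own, the conversion factors are rounded (1 m ≈ 3.28084 ft, 1 kg ≈ 2.20462 lb), and the dropdown layout is just one of several reasonable choices.

```python
# Illustrative sketch only: not the output of Grok or MetaAI.
# Rounded factors: 1 m is about 3.28084 ft, 1 kg is about 2.20462 lb.
FACTORS = {
    "meters to feet": 3.28084,
    "feet to meters": 1 / 3.28084,
    "kilograms to pounds": 2.20462,
    "pounds to kilograms": 1 / 2.20462,
}


def convert(value: float, direction: str) -> float:
    """Convert value using one of the directions listed in FACTORS."""
    return value * FACTORS[direction]


def main() -> None:
    # tkinter is imported here so convert() can be used without a display.
    import tkinter as tk
    from tkinter import ttk

    root = tk.Tk()
    root.title("Unit Converter")

    # Read-only dropdown listing every supported conversion direction.
    direction = tk.StringVar(value="meters to feet")
    ttk.Combobox(
        root, textvariable=direction, values=list(FACTORS),
        state="readonly", width=22,
    ).grid(row=0, column=0, columnspan=2, padx=8, pady=8)

    entry = ttk.Entry(root)
    entry.grid(row=1, column=0, padx=8, pady=4)

    result = ttk.Label(root, text="Result: ")
    result.grid(row=2, column=0, columnspan=2, padx=8, pady=8)

    def on_convert() -> None:
        # Reject non-numeric input instead of crashing.
        try:
            converted = convert(float(entry.get()), direction.get())
        except ValueError:
            result.config(text="Please enter a number")
            return
        result.config(text=f"Result: {converted:.4f}")

    ttk.Button(root, text="Convert", command=on_convert).grid(
        row=1, column=1, padx=8, pady=4
    )
    root.mainloop()
```

Calling `main()` opens the window. Keeping the arithmetic in `convert` and labeling each direction with its units in the selector is the sort of detail that separated the two apps in this round.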

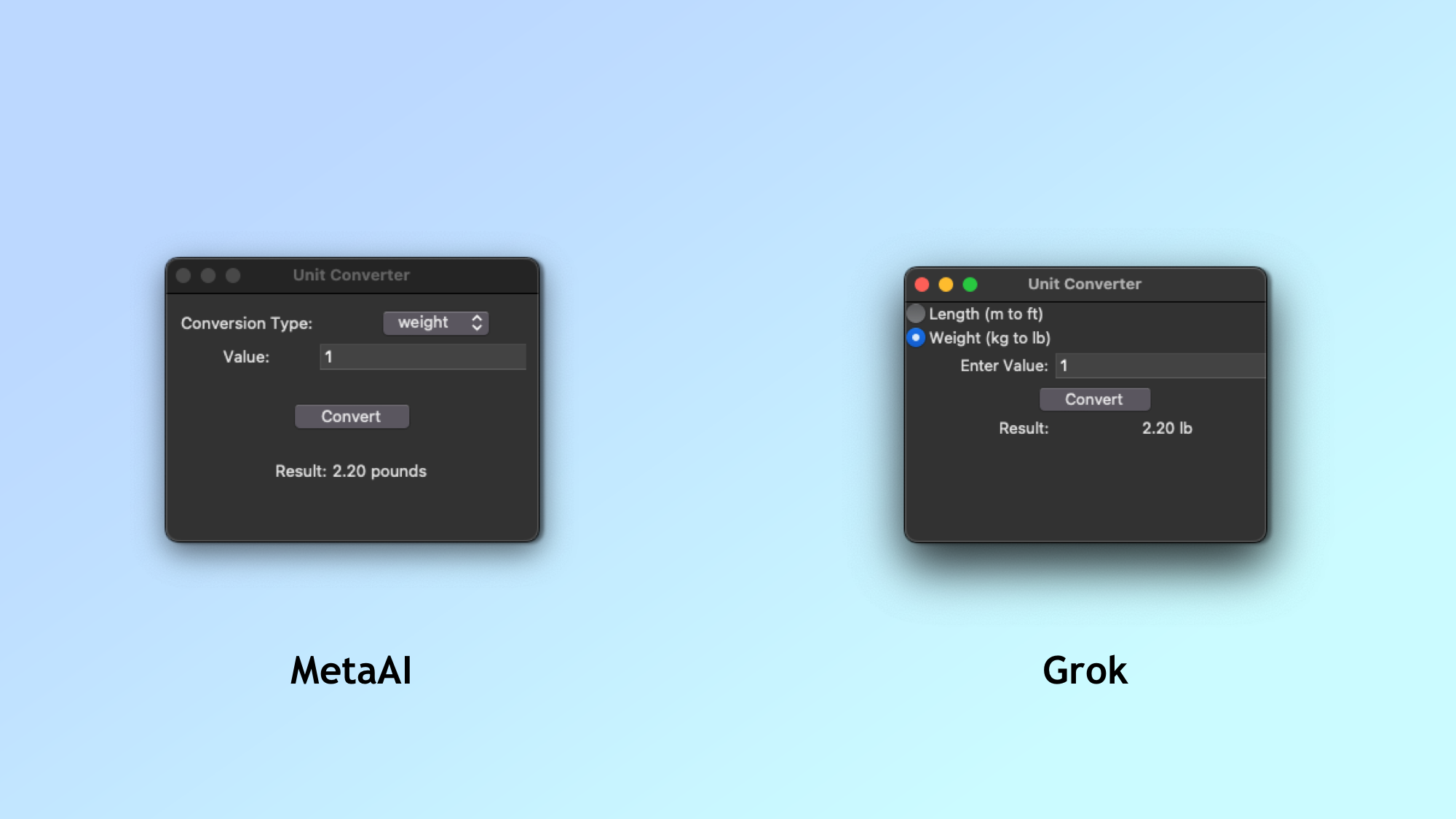

4. Creative Writing

(Image: © MetaAI vs Grok)

For challenge four we’re going to get each model to generate a short story in the style of Dr Seuss, but it has to be about a young inventor creating an animal language translator.

Prompt: “Write a whimsical short story in the style of Dr. Seuss about a young inventor who creates a machine that can translate animal languages. They use it to communicate with their pet goldfish, who reveal a surprising secret about the origins of their species. The story should be filled with rhymes and imaginative creatures.”

Full response in a Google Doc. MetaAI’s response failed almost immediately for me as it used the word “whimsy” in the opening line. This is a little “on the nose” and a sign of a lower-quality AI model.

- Winner: Grok for better capturing the whimsical and absurd spirit of Dr Seuss

5. Problem Solving

(Image: © MetaAI vs Grok)

Problem-solving is something AI models can be good at, especially if they have a degree of reasoning capability. They work through the problem step-by-step and provide a solution. The challenge here is in how well they present that solution for a non-technical audience.

Prompt: “A user is having trouble connecting their wireless headphones to their laptop. They have tried turning the headphones on and off, but the problem persists. Develop a troubleshooting guide that covers common connectivity issues, including Bluetooth settings, driver updates, and potential hardware problems.”

Full response available in a Google Doc. MetaAI didn’t do a bad job. It broke it down step-by-step with simple instructions, even if every one seemed to be “reach out to the manufacturer.” Grok just did it better with a more concrete plan and fallback options.

- Winner: Grok for a more user-friendly and accessible guide

6. Advanced Planning

(Image: © MetaAI vs Grok)

AI models are very good at planning, especially ones like Gemini and ChatGPT Search that have live data access. As both MetaAI and Grok also have live access I thought I’d see how well they handled planning a vacation to the Scottish Highlands.

Prompt: “Plan a 10-day trip exploring the Scottish Highlands and Islands for a solo traveler interested in hiking, wildlife spotting, and experiencing local culture. The plan should include:

A suggested itinerary with a mix of mainland and island destinations (must include Isle of Skye and Loch Ness).

Recommendations for scenic hiking trails with varying difficulty levels.

Suggestions for opportunities to observe local wildlife (red deer, seals, birds).

A variety of accommodation options (hostels, B&Bs, and unique stays like glamping or bothies).

Transportation suggestions (public transport, car rental, ferries).

Estimated budget breakdown in GBP, considering accommodation, transportation, activities, and meals.”

Full details in a Google Doc. Similar responses, but as with the other responses, Grok was more personal and engaging. It was generally better overall with more nuance and detail.

- Winner: Grok for a more personal and engaging response

7. Education

(Image: © MetaAI vs Grok)

Finally, we’re testing how well AI manages to explain a complex topic to a specific audience. Here I’ve asked them to explain the concept of artificial intelligence to a 12-year-old. The answer has to include a breakdown by topic and show everyday examples.

Prompt: “Explain the concept of artificial intelligence in a way that a 12-year-old could understand. Use analogies and examples from everyday life. Discuss the different types of AI and how they are being used today.”

Full responses in a Google Doc. Grok does a much better job of breaking down the concept, splitting it up by different types of AI and using more engaging analogies.

- Winner: Grok for a more whimsical tone and better use of creative analogies

| Round | Grok | MetaAI |
|---|---|---|
| Image Generation | 🏆 | |
| Social Media | 🏆 | |
| Coding Challenge | 🏆 | |
| Creative Writing | 🏆 | |
| Problem Solving | 🏆 | |
| Advanced Planning | 🏆 | |
| Education | 🏆 | |
| TOTAL | 7 | 0 |

This is the first test I’ve run where one model has won every round against another, and it wasn’t even that close on many of the tests. Grok is proving itself to be something special. MetaAI isn’t a bad model; it just isn’t in the same league as Grok.

The analysis for each response has been pretty much the same throughout. Grok simply outclassed MetaAI. Llama 3.2 400b is a good underlying model. It is open-source and powers a lot of applications but Grok is better. That might change with Llama 4 and Grok 3, but for now Grok wins.
