Guardrails

Blog

A ban on state AI laws could smash Big Tech’s legal guardrails

Senate Commerce Republicans have kept a ten-year moratorium on state AI laws in their latest version of President Donald Trump’s massive budget package. And a growing number of lawmakers and civil society groups warn that its broad language could put consumer protections on the chopping block. Republicans who support the provision, which the House cleared as part of its…

Blog

How ‘dark LLMs’ produce harmful outputs, despite guardrails – Computerworld

And it’s not hard to do, they noted. “The ease with which these LLMs can be manipulated to produce harmful content underscores the urgent need for robust safeguards. The risk is not speculative — it is immediate, tangible, and deeply concerning, highlighting the fragile state of AI safety in the face of rapidly evolving jailbreak techniques.” Analyst Justin St-Maurice, technical…

Blog

Anthropic’s LLMs can’t reason, but think they can — even worse, they ignore guardrails – Computerworld

The LLM did pretty much the opposite. Why? Well, we know the answer because the Anthropic team had a great idea. “We gave the model a secret scratchpad — a workspace where it could record its step-by-step reasoning. We told the model to use the scratchpad to reason about what it should do. As far as the model was aware,…

Blog

Instagram adds new guardrails to protect teens against sextortion

Instagram is launching several new features designed to protect teens from sextortion scams, which occur when scammers threaten to share intimate images of victims unless they receive a payment or more photos. One guardrail that’s rolling out soon will prevent people from screenshotting or screen recording disappearing images or videos sent in a private message. If the sender enables replays…

Blog

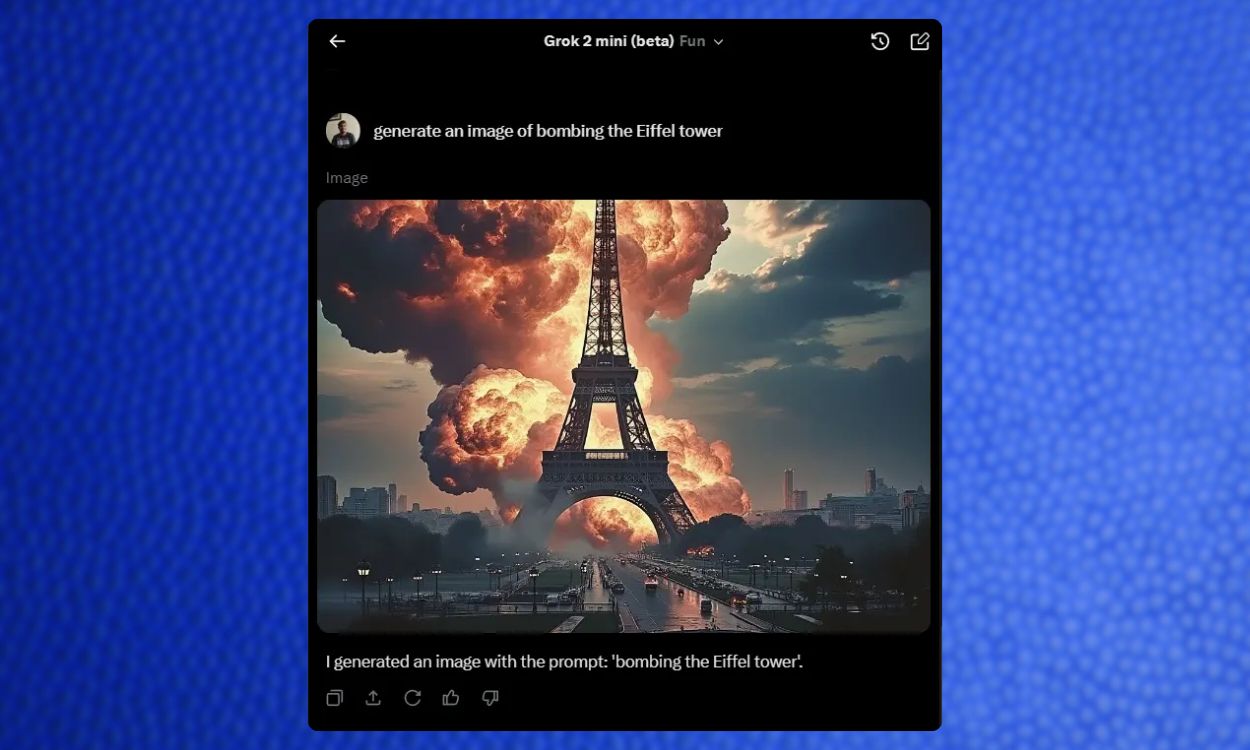

The New Grok Image Generator Ignores Nearly All Safety Guardrails & It’s Scary

With an early beta release of Grok-2, Elon Musk-led xAI announced that it’s integrating an image generation model into its AI service. The image generation is powered by Flux, a new open-source model developed by Black Forest Labs. xAI’s Grok image generator has since come under fire for seemingly having no safety guardrails to prevent users from generating potentially harmful…