Google Cloud has announced a new chip designed for AI inference, which it says is both more powerful and far more energy efficient than its predecessors.

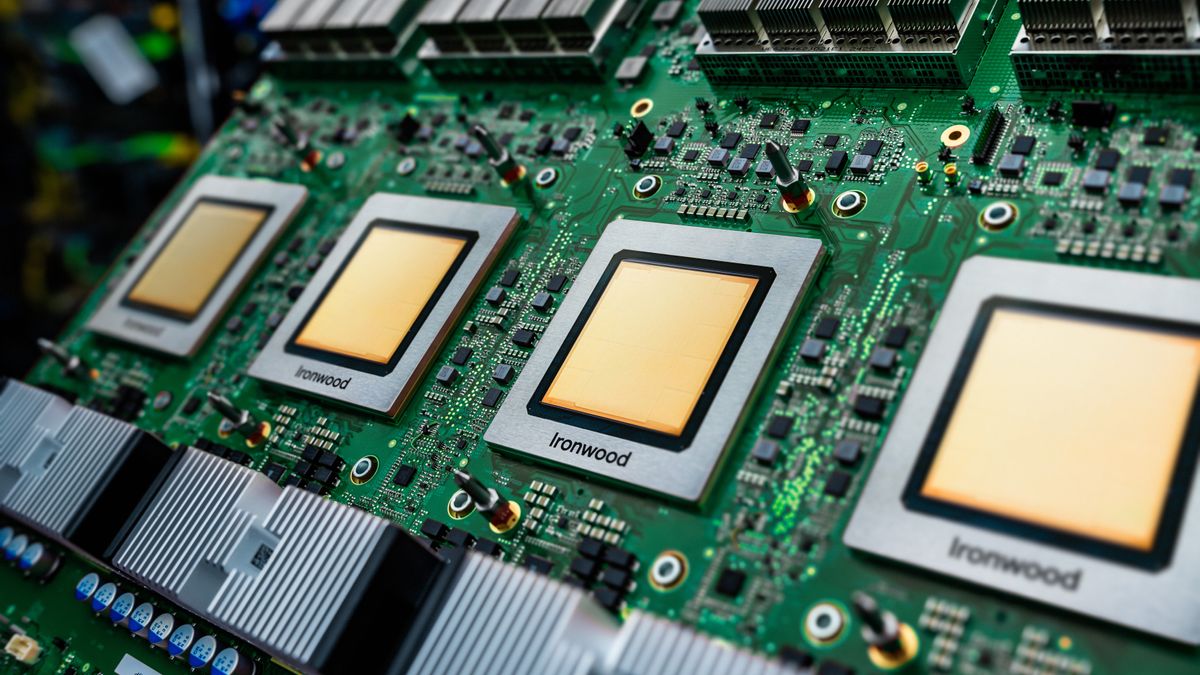

Ironwood, Google Cloud’s 7th generation tensor processing unit (TPU), offers five times more computing power than the firm’s previous-generation Trillium chips.

Each full-scale Ironwood pod is capable of 42.5 exaflops of performance, which Google Cloud said is 24 times the compute power of El Capitan, the world's fastest supercomputer.

Ironwood will be available in two configurations, a 256-chip pod and a 9,216-chip pod. Each chip is liquid-cooled, equipped with 192GB of high-bandwidth memory (HBM), and capable of 7.2TB/sec of memory bandwidth.
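To put the pod-level figures in per-chip terms, the short sketch below simply divides and multiplies the numbers quoted above. It assumes the 42.5 exaflops figure applies to the 9,216-chip configuration and that compute is spread evenly across chips. The El Capitan value of 1.742 exaflops is an assumption taken from its TOP500 benchmark result, not a figure from Google Cloud; because Google's number is a peak figure and El Capitan's is a measured benchmark, the ratio is only indicative.

```python
# Back-of-the-envelope arithmetic on the Ironwood figures quoted in the article.
# Assumption: the 42.5 exaflops figure refers to the 9,216-chip pod.
# Assumption: El Capitan's 1.742 exaflops is its TOP500 (HPL) result,
# used here only as a reference point; it does not come from Google Cloud.

POD_EXAFLOPS = 42.5          # per full-scale Ironwood pod, as quoted
CHIPS_PER_POD = 9_216        # largest Ironwood configuration
HBM_GB_PER_CHIP = 192        # memory per chip, as quoted
EL_CAPITAN_EXAFLOPS = 1.742  # assumed reference value


def per_chip_teraflops(pod_exaflops: float, chips: int) -> float:
    """Split pod-level compute evenly across chips (1 exaflop = 1e6 teraflops)."""
    return pod_exaflops * 1e6 / chips


def pod_memory_pb(gb_per_chip: int, chips: int) -> float:
    """Total pod memory in petabytes, using decimal units (1 PB = 1e6 GB)."""
    return gb_per_chip * chips / 1e6


if __name__ == "__main__":
    print(f"Per-chip compute: {per_chip_teraflops(POD_EXAFLOPS, CHIPS_PER_POD):,.0f} teraflops")
    print(f"Pod memory: {pod_memory_pb(HBM_GB_PER_CHIP, CHIPS_PER_POD):.2f} PB")
    print(f"Ratio vs El Capitan: {POD_EXAFLOPS / EL_CAPITAN_EXAFLOPS:.1f}x")
```

Run as a script, this prints roughly 4,612 teraflops per chip, about 1.77 PB of memory per pod, and a ratio of about 24.4x, in line with the "24 times" claim.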

The announcement comes as Google Cloud works to meet growing demand for AI inference across its cloud ecosystem. It cited demanding frontier AI workloads, including mixture-of-experts (MoE) and advanced reasoning models, as driving enterprise demand for intensive computation with little to no latency.

Google Cloud also said the rise of AI agents, which work autonomously and proactively within an organization’s cloud environment, will greatly drive up inference demand.

For example, a security agent running 24/7 will continuously ingest and output data as it scans for malicious activity, placing a sustained load on cloud infrastructure.

The announcement was made on the opening day of Google Cloud Next 2025, the firm’s annual conference at which AI is once again taking center stage.

As demand for generative AI has risen and models have become more sophisticated, the energy draw of data centers has become a growing concern.

In a briefing with assembled media, Amin Vahdat, VP & GM of ML, Systems, and Cloud AI at Google Cloud, stated that the past eight years have seen demand to train and serve AI models increase by a factor of 100 million.

With this in mind, Google Cloud designed Ironwood to be its most energy-efficient TPU yet, offering 29.3 teraflops per watt, a nearly 30x improvement over Google's first Cloud TPU from 2018.

Google Cloud uses its TPUs to train and serve its own Gemini models and credits them for Gemini's competitive cost-to-performance ratio. It said Gemini 2.0 Flash delivers 24x more intelligence per dollar than GPT-4o and five times more than DeepSeek-R1, a model known for its extremely low cost.

Hypercomputer expansion

Beyond the hardware announcements, Google Cloud also unveiled sweeping updates to AI Hypercomputer, its integrated cloud architecture for compute, networking, storage, and software.

Chief among these is Pathways, the machine learning (ML) runtime developed internally by Google DeepMind, which is now being made available to Google Cloud customers.

Pathways allows multiple Ironwood pods to connect and work in tandem, letting enterprises harness tens or even hundreds of thousands of Ironwood chips as a single, massive compute cluster.

Rather than simply connecting the pods, Pathways can dynamically scale the different stages of AI inference across different chips to maintain low-latency responses and high throughput.

Google Cloud stated this has transformative potential for the future operations of frontier generative AI models in the cloud.

The firm also announced new capabilities for Google Kubernetes Engine (GKE) aimed at matching customers with the right resources for AI inference. GKE Inference Gateway provides model-aware load balancing and scaling, which Google Cloud said can reduce serving costs by up to 30% and tail latency by up to 60%.

To assist customers further, the firm is also releasing GKE Inference Recommendations in preview, which can configure Kubernetes and infrastructure based on a customer’s AI model choice and performance targets.

In addition to its own TPUs, Google Cloud customers can access Nvidia hardware via the cloud giant’s A4 and A4X virtual machine (VM) offerings.

Google Cloud announced general availability of Nvidia's highly capable B200 via its A4 VMs at Nvidia GTC 2025 last month, and customers will now also be able to access Nvidia's GB200 chips via A4X VMs in preview.
