Key Takeaways

- Graphics cards with upgradable VRAM existed in the past, but the idea is more complex and risky to pull off today.

- Some modders have upgraded the VRAM on modern cards, but it takes skill and specialized equipment, and it risks damaging the card.

- Challenges for upgradable VRAM cards include heat management, standardization, and industry collaboration, so they're unlikely to be cost-effective.

If you have a powerful graphics card with too little VRAM, you don’t have much choice other than buying a whole new card. So why not simply make a graphics card that’s designed to have upgradable VRAM? It turns out things are not quite so simple.

ATI Had Graphics Cards With RAM Slots

The idea of a graphics card that you can buy now and upgrade later isn’t new. ATi (before being bought out by AMD) had a series of graphics cards with a slot where you could put in an additional SGRAM module.

So, you could buy a 4MB (yes, you read that right) card now, and later, if it turned out you needed more, you could double your memory with a drop-in upgrade. I don’t know how many people actually took advantage of this feature, but there have definitely been mainstream cards with this option.

Some People Have Managed to Upgrade VRAM on Modern Cards

Apart from the simple fact that cards with upgradable memory did exist decades ago, is it even feasible to do this with modern cards? The short answer is “yes,” and modders have already done so with factory cards by replacing the existing memory chips with higher-capacity ones.

This proves that existing cards can, in principle, work with more VRAM than they shipped with, but of course, it’s not easy. Even if the firmware and software side poses no problems, replacing the memory chips on an existing card is tricky and requires advanced skills and equipment. So it’s not something generally worth doing, especially for a card that’s working just fine, since this procedure always runs the risk of damaging the card you’re trying to modify.
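To make the arithmetic behind a chip swap like this concrete, here's a rough sketch. The card is hypothetical: I'm assuming eight memory chips on the board, and stock chips of 8Gb (gigabits) each being swapped for 16Gb ones. The function name and the numbers are mine for illustration, not taken from any specific mod.

```python
def total_vram_gb(chip_count: int, chip_density_gbit: int) -> float:
    """Total VRAM in gigabytes for a card populated with identical memory chips."""
    # Chip densities are quoted in gigabits; 8 gigabits make one gigabyte.
    return chip_count * chip_density_gbit / 8


# Hypothetical card (an assumption for this example): eight memory chips.
CHIP_COUNT = 8

stock_gb = total_vram_gb(CHIP_COUNT, 8)    # eight 8Gb (1GB) chips
modded_gb = total_vram_gb(CHIP_COUNT, 16)  # same eight spots, 16Gb (2GB) chips

print(f"Stock: {stock_gb:g}GB, after chip swap: {modded_gb:g}GB")
```

The number of chips stays the same, so the only way to add capacity is to use denser chips. That's why these mods mean desoldering and replacing every memory chip on the board.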

There Are Too Many Challenges

In principle, there’s nothing standing in the way of a card designed to have upgradable VRAM, but that doesn’t mean it will be cheap or easy. There are several challenges I can think of that would make it hard to justify, and this has to be weighed up against whether the people who buy these GPUs would find it worth the extra cost.

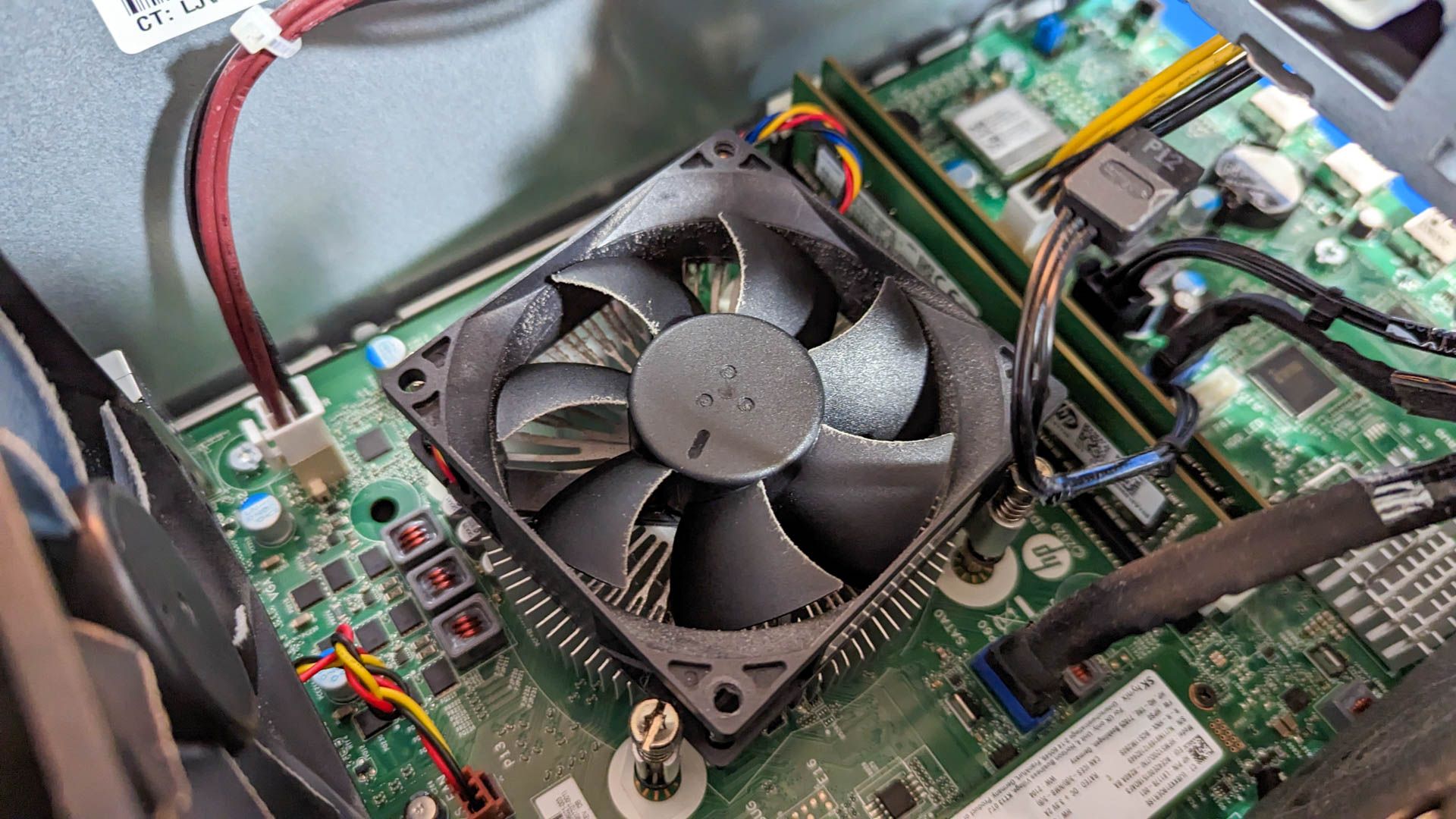

Unlike the memory on those old ATi cards, modern GPU VRAM runs hot and needs active cooling. So if you wanted to create a card that has some sort of upgradable modules, you'd have to design it so that the memory has a separate cooling system. Right now the standard design cools the GPU, its memory modules, and other components with a single heatsink and fan assembly. So you can see this will already complicate designs and have a knock-on effect when it comes to costs.

You also need some sort of standardized memory module format, just as we have SODIMMs or LPCAMM memory modules for laptops. Developing a VRAM module standard would take years and the cooperation of many large industry leaders. Making a proprietary solution for one brand of card would also wipe out any benefit, thanks to the high prices proprietary solutions often carry. PS Vita memory cards, anyone?

This isn’t even an exhaustive list, just a few obvious challenges. While there’s no technical reason it can’t happen, I don’t see it being worth it for anyone. A more important question is why leading GPU makers sell cards without adequate VRAM. Why are we still getting GPUs in 2024 and beyond with only 8GB of memory? After all, memory is relatively cheap.

The answer has more to do with the state of the GPU business than anything technological. NVIDIA owns almost the entire discrete GPU market, with AMD playing a distant, barely-audible second fiddle, and Intel’s discrete GPU market share has literally dropped to zero. In this market context, it makes no sense to offer us higher VRAM amounts on mainstream cards. NVIDIA would much rather we buy a higher tier card instead.

This is also, in my opinion, why AMD offers larger VRAM sizes than NVIDIA on its mainstream cards. It can’t compete in raw performance or features, so it’s trying to improve the value proposition in other ways.

Hardware Will Get Less Upgradable, Not More

While it’s always going to be my wish that the hardware we spend so much money on will offer some way to make it better down the line, overall, that doesn’t seem to be the trend. While projects like modular laptops get some media attention, I don’t think modularity is the future. With slimline laptops, mini PCs, handheld computers, and other similar devices putting more components into smaller packages, it seems the future might be more tightly integrated. Who knows what that means for desktops as a whole, much less the vain hope that GPUs could be a little more flexible.
