I just tested the new iOS 18.2 Apple Intelligence features — and I’m surprisingly underwhelmed

When talking about Apple Intelligence, it’s important to remember that Apple’s efforts to bring more artificial intelligence to its mobile devices are a work in progress. Where we are today is not where we’re going to be a year from now, or even in a few months, given the pace at which Apple is rolling out updates to iOS 18, iPadOS 18 and macOS Sequoia. The important thing is that Apple seems to have a very clear vision of what purpose AI serves on its devices, and it’s building up the tools to deliver on that vision.

And I’m glad that Apple views things like the just-released iOS 18.2 update as just another step in that process, with tools that are going to improve over time. Because I find iOS 18.2 to be a bit of a yawner with some marquee features that really don’t bring that much to the table.

That probably says less about Apple’s efforts and more about what I use my phone for and how I see AI assisting that. It’s not that the additions of iOS 18.2 are badly implemented or misguided — they just don’t offer capabilities that I want.

Image Playground: What is it good for?

At their best, Apple Intelligence features either save me time or save me hassle. With October’s iOS 18.1 update, for example, I loved the summary tools that arrived in Mail, especially the feature that generates summaries of long email exchanges, giving me just a simple paragraph listing the key points. That’s a real time-saver for me, as is the summary text that now appears next to messages in my inbox. (My only complaint there is that I wish Mail showed the whole summary instead of cutting it off after a couple of lines.)

There are a number of features like that courtesy of iOS 18.1. The Phone app offers a similar time-saving summary feature for the phone call transcripts it can create from the recordings it now supports. Memory Movie is hardly an essential addition to the iPhone, but it takes a task that would take me hours in iMovie (assembling a slideshow from images and videos in my photo library based on my prompts) and whips out a finished product in less than a minute. These aren’t parlor tricks; they’re useful tools.

I’m not sure I can say the same thing about Image Playground, which can generate an image based on the prompts you provide — text, mostly, but you can also use a photo from your library to get Image Playground’s creative juices flowing. That’s a fine concept, but in practice Image Playground leaves a lot to be desired.

It’s not that Image Playground’s output is bad, just limited. The app is at its best when you limit its output to portraits, but you’ve got only two style options: animation, which makes everyone look like they’re an extra in a Pixar movie, and illustration, which has more of a comic book feel. With no additional styles, Image Playground’s output begins to look the same, and the novelty wears off fast.

It also doesn’t help that once you use Image Playground to generate something, there’s not a whole lot you can do with it. Oh, you can text one of your portraits to a friend in Messages or paste the image into a relevant Note, but you can’t really resize things beyond the square layout all Image Playground output is limited to. There’s not even support for that Visual Look Up feature that lets you press and hold on an image to lift it out of its background.

If good Apple Intelligence features save me time, Image Playground does the exact opposite. At this point, it’s wasting my time.

Features with some appeal — but not for everyone

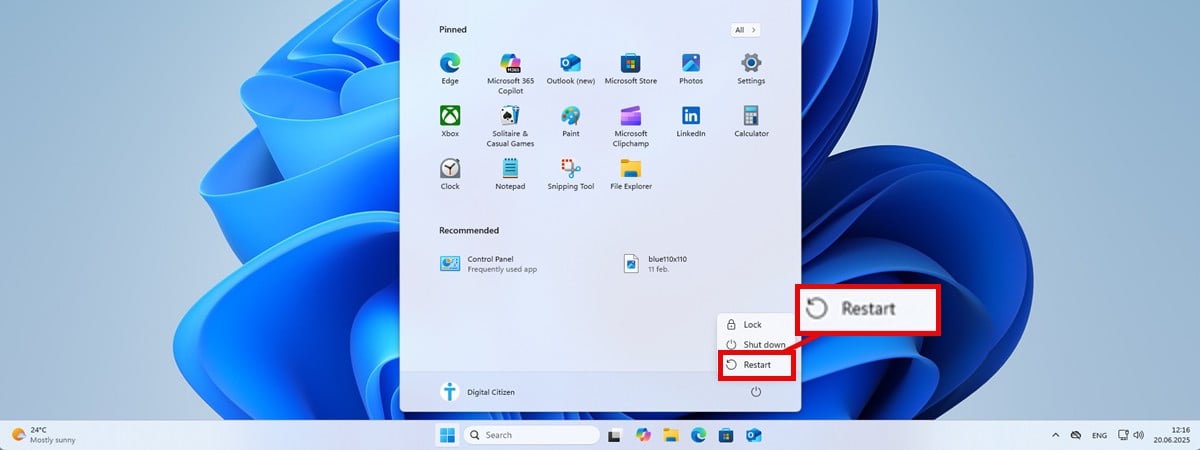

Other Apple Intelligence features in iOS 18.2 fall flat with me for personal reasons. I rarely use emoji in any sort of communication — I can’t stand them, really — so a tool like Genmoji that generates customized emoji from text prompts has no appeal for me.

Even worse, I envision the texts, emails and notes I get from people who do use emoji are going to become even harder to decipher when I have to unpack the customized symbols they’ve created in Genmoji. Standard emoji are so small that they’re already hard enough for me to interpret. Is that supposed to be a taco or a gyro? Is that hand gesture meant to be friendly or dismissive? DID SOMEBODY JUST INSULT ME WITH A PICTOGRAM? I see Genmoji adding a new layer of frustration to my life.

I have a lot of colleagues excited about the integration of ChatGPT into Siri, as they believe it will fill in a lot of gaps in Siri’s knowledge base. Maybe so, but I have strong reservations about OpenAI, the company that makes ChatGPT, and that makes me very reluctant to allow it onto my iPhone. ChatGPT is an opt-in feature, thankfully, and that toggle is remaining off for as long as possible.

I think I might feel differently about the value of the Apple Intelligence features in iOS 18.2 if I had regular access to an iPhone 16. I might at some point, but up to now, I’ve done all my Apple Intelligence testing on an iPhone 15 Pro. That means I can’t use Visual Intelligence, a Google Lens-like feature that only works on the latest iPhones. That’s a pity since my colleagues who’ve tested the feature believe that Visual Intelligence is a real game changer, adding serious heft to Apple Intelligence.

My colleague Richard Priday used the camera on his iPhone 16 and the Visual Intelligence feature to translate signs on the go. He also used it at an art gallery to look up information about paintings and artists, essentially turning his phone into a pocket guide book. Another Tom’s Guide writer, Amanda Caswell, came up with the best use of all: turning to Visual Intelligence to count calories. I’ve argued before that Apple Intelligence features, while promising, aren’t powerful enough to justify upgrading your iPhone on their own, but Visual Intelligence sounds like the first capability to make me rethink that stance.

Things can only get better

Again, I don’t want to give the impression that an Apple Intelligence feature I may not care for now is going to stay that way forever. Things have a way of evolving, especially in AI where models can get smarter and features can be refined. Writing Tools is a good example of that.

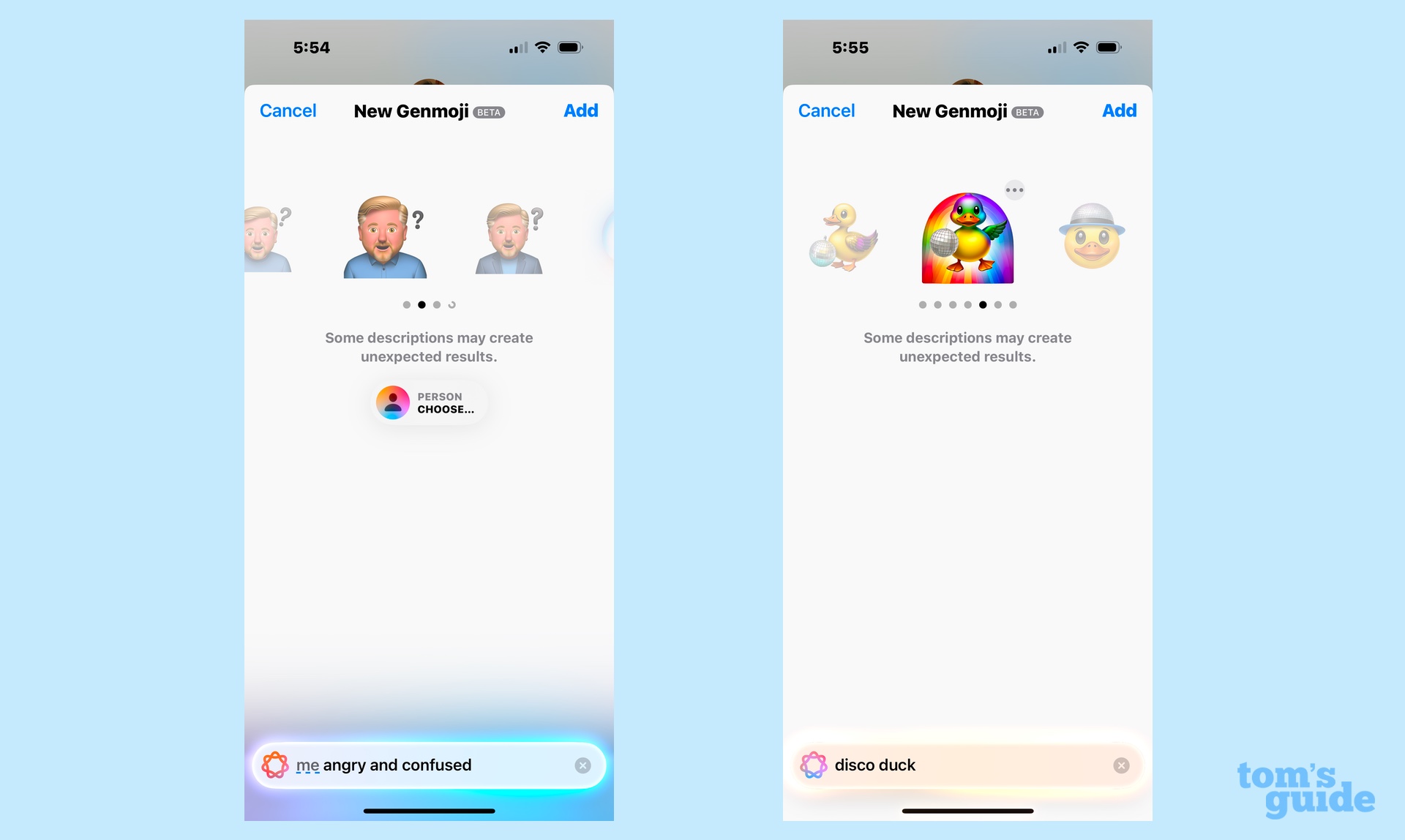

When iOS 18.1 arrived, I got very sniffy about Writing Tools, which I don’t think bring much to the table when it comes to polishing writing. Some capabilities could come in handy — if you’re not used to writing a formal letter, the button to make your writing sound more formal can be very effective in adhering to a very rigid style. But for the most part, Writing Tools seem to take all the individual voice out of your text, making it sound like a computer is handling your writing for you. Not a great impression to give.

iOS 18.2 takes a step to correct this with an addition to Writing Tools. The Describe Your Change feature lets you give specific instructions about what you want in a rewrite, such as “make this more enthusiastic.” I need to give Describe Your Change more of a workout to see how effective it is (I suspect its biggest additions will be adjectives and word choice), but at least it promises greater flexibility than the preset options featured in Writing Tools initially. That’s a step in the right direction.

And honestly, not every Apple Intelligence feature needs to be a hit for Apple’s work with AI to pay off. Remember how I mentioned how much I enjoyed the changes to Mail at the start of this article? It would be more accurate to say I enjoy most of the changes, like the aforementioned summary tools. Mail also added a redesign that created different inboxes for different types of mail, and I disliked the effect of that so much that I immediately used the built-in feature to revert to a single inbox view in Mail.

My point here is Apple Intelligence is a grab bag. Some of the features you’re going to like, some not so much. For me, a lot of iOS 18.2 falls under the Not So Much header. But that doesn’t mean iOS 18.3, or the updates beyond that, will wind up the same way.
